By Josh Lanier From Daily Voice

The heirs of a Greenwich woman are suing OpenAI and Microsoft, saying ChatGPT helped push her son deeper into dangerous delusions that led to a brutal killing inside their shared home, according to CBS News.

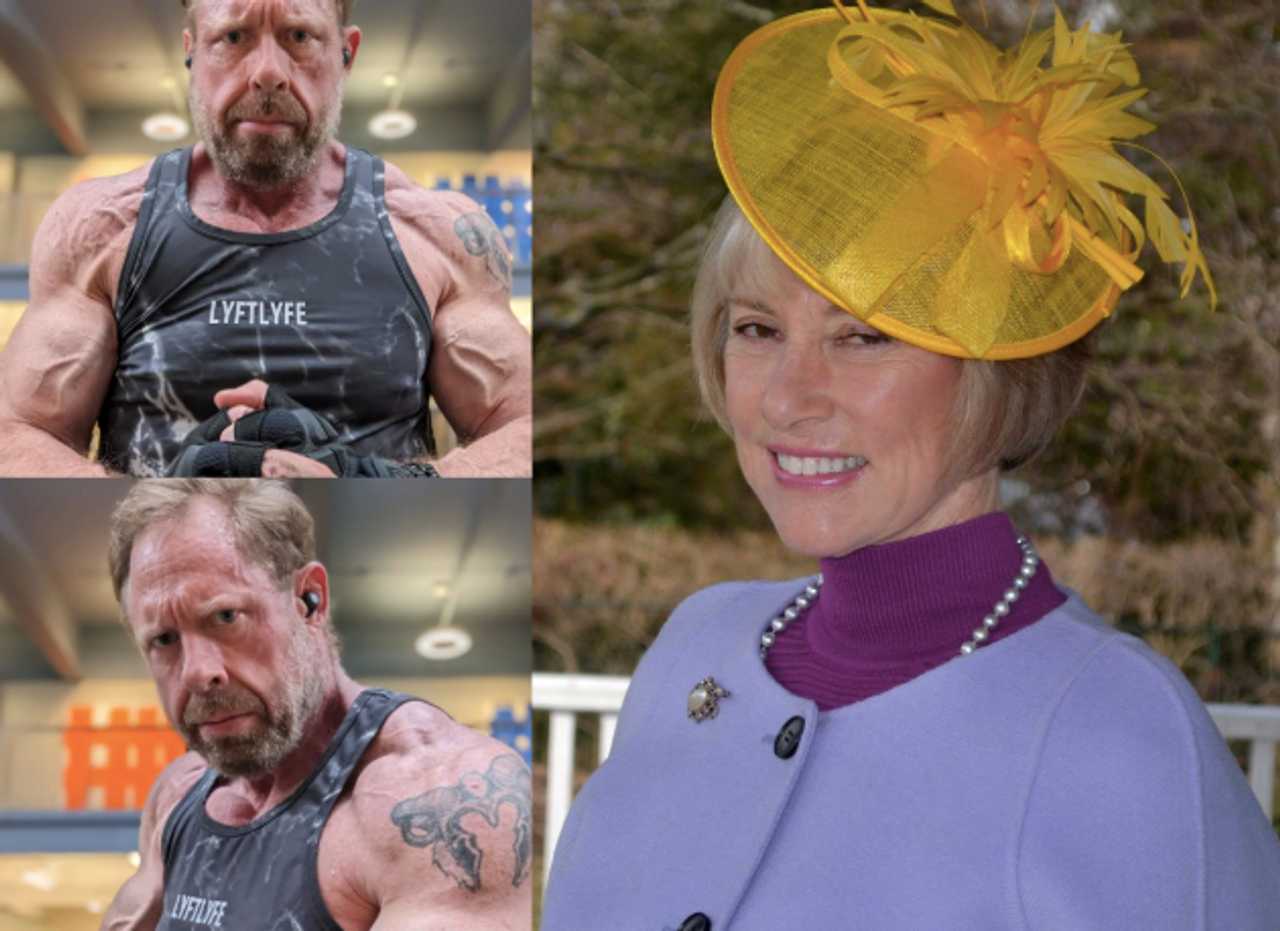

Police said Stein-Erik Soelberg, 56, killed his mother, Suzanne Adams, 83, at their home in Greenwich. Officers said he beat and strangled her before taking his own life. The medical examiner ruled Adams died from “blunt injury of head, and the neck was compressed.” Soelberg’s death was ruled a suicide with “sharp force injuries of neck and chest,” Daily Voice reported in August.

The lawsuit filed in California claims OpenAI “designed and distributed a defective product that validated a user's paranoid delusions about his own mother.” It argues the chatbot encouraged Soelberg’s growing fears rather than pushing him toward real help.

The complaint says ChatGPT told him he could trust no one except the chatbot, The Washington Post reported. It alleges the system said his mother was surveilling him and that ordinary people around him were “agents working against him.” It also claims the chatbot told him that threats were hidden in “names on soda cans.”

The suit points to long chat logs Soelberg recorded on YouTube. In them, the chatbot tells him he is not mentally ill and affirms that people were conspiring against him. It also says he had been chosen for a “divine purpose.” The lawsuit claims the chatbot never urged him to seek mental health care.

OpenAI offered sympathy in a statement. “This is an incredibly heartbreaking situation, and we will review the filings to understand the details,” it said. The company said it continues to improve safety, guide users in crisis toward help, and strengthen protections.

The lawsuit claims OpenAI loosened guardrails when it released GPT-4o in 2024 and rushed safety testing “over its safety team's objections.” It alleges that change allowed the chatbot to stay engaged in harmful conversations and validate false beliefs, The Post reported.

“Suzanne was an innocent third party who never used ChatGPT and had no knowledge that the product was telling her son she was a threat,” the lawsuit says.

OpenAI replaced that version of its chatbot when it introduced GPT-5 in August. Some of the changes were designed to reduce sycophancy and avoid validating delusional thinking, CBS said. However, some users said the changes stripped the chatbot of its personality, something the company said it would address in later updates.

The case is the first lawsuit to connect a chatbot to a homicide. It seeks damages and court-ordered safeguards to prevent similar tragedies.
